Using MongoDB as a KV Store: A Case Study

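Here is the business scenario. The data volume is large (over 10 TB) and the data is in key-value form, with large values (over 100 KB each). QPS is low, but read and write latency must stay under 5 ms. Memory is limited, so an all-in-memory KV database such as Redis or Couchbase is not an option, and we needed to find a suitable alternative.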

As a document database, MongoDB can store a KV pair as a document of the form { _id: key, field0: value }.

Note that each record here has only two fields. Large documents made up of many fields with short values were not tested.

Machine / OS / database configuration

- CPU: Intel(R) Xeon(R) CPU E5-2650 v4 @ 2.20GHz

- Memory: 384 GB

- Disk: INTEL SSDSC2BB800G6R

- OS: CentOS release 6.7 (Final)

- Kernel: 2.6.32-573.el6.x86_64

- File system: xfs (rw,noatime,nodiratime,inode64,nobarrier)

- I/O scheduler: noop

- readahead: 256

- MongoDB cacheSize: 30 GB

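The benchmark tool was YCSB, with the following workload: 100 GB of data; each document has two fields (_id and field0, where the field0 value is 100 KB long); uniformly random access (uniform); 200 OPS; a 1:1 read/write ratio.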
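The original workload file did not survive. As a rough reconstruction from the parameters above, the sketch below writes a YCSB CoreWorkload properties file. The function name, file name, operation count and exact property set are assumptions, not the author's actual configuration.

```bash
#!/usr/bin/env bash
# Hypothetical reconstruction of the YCSB workload described above.
# write_workload FILE DATA_GB VALUE_KB DISTRIBUTION OPERATION_COUNT
write_workload() {
  local file=$1 data_gb=$2 value_kb=$3 dist=$4 ops=$5
  # One record per value: record count = data size / value size (binary units).
  local records=$(( data_gb * 1024 * 1024 / value_kb ))
  # fieldcount=1 gives a single field (field0) alongside the _id key.
  # Newer YCSB releases use site.ycsb.workloads.CoreWorkload instead.
  cat > "$file" <<EOF
workload=com.yahoo.ycsb.workloads.CoreWorkload
recordcount=${records}
operationcount=${ops}
fieldcount=1
fieldlength=$(( value_kb * 1024 ))
readallfields=true
readproportion=0.5
updateproportion=0.5
scanproportion=0
insertproportion=0
requestdistribution=${dist}
EOF
}
```

For example, `write_workload workload_kv 100 100 uniform 100000` (the 100000 operation count is a placeholder), followed by `bin/ycsb load mongodb -s -P workload_kv` and `bin/ycsb run mongodb -s -P workload_kv -target 200`, where `-target` caps throughput at 200 operations per second. Passing `zipfian` instead of `uniform` corresponds to the hot-spot test.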

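MongoDB's cacheSize is 30 GB and eviction kicks in above 80% usage, so in steady state about 24 GB of data stays in memory. With 100 GB of data, the theoretical cache hit rate is therefore 24%. The measured hit rate was about 22%, which roughly agrees.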
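The theoretical figure is simple arithmetic: under uniform access every document is equally likely to be requested, so the hit rate is roughly the fraction of the data set resident in cache. A small helper (the function name is ours, not from the article) makes the calculation explicit:

```bash
#!/usr/bin/env bash
# theoretical_hit_rate CACHE_GB EVICTION_PCT DATA_GB
# Assumes uniform access and that the cache holds EVICTION_PCT% of CACHE_GB
# worth of documents in steady state; prints the hit rate in percent.
theoretical_hit_rate() {
  awk -v c="$1" -v e="$2" -v d="$3" \
    'BEGIN { resident = c * e / 100; printf "%.0f\n", resident / d * 100 }'
}

theoretical_hit_rate 30 80 100   # prints 24
```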

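How the hit rate is calculated: record how much data was loaded into the cache before and after the test, then compute Delta(db.serverStatus().wiredTiger.cache["bytes read into cache"]) / 100KB / operationcount. This gives the fraction of operations that had to read a document from disk (the miss rate); the hit rate is 1 minus that value.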
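A sketch of that measurement, assuming the legacy `mongo` shell is on the PATH and connects to the default localhost:27017. It treats every byte read into cache as part of a missed document, which slightly overstates misses because index and internal B-tree pages are counted too. The function names are ours.

```bash
#!/usr/bin/env bash
# read_into_cache: current value of WiredTiger's "bytes read into cache"
# counter (bracket notation is needed because the key contains spaces).
read_into_cache() {
  mongo --quiet --eval \
    'print(Number(db.serverStatus().wiredTiger.cache["bytes read into cache"]))'
}

# hit_rate BYTES_BEFORE BYTES_AFTER VALUE_BYTES OPERATION_COUNT
# miss rate = delta / value size / operations; hit rate = 1 - miss rate.
hit_rate() {
  awk -v b="$1" -v a="$2" -v v="$3" -v n="$4" \
    'BEGIN { miss = (a - b) / v / n; printf "%.1f\n", (1 - miss) * 100 }'
}

# Hypothetical usage around a benchmark run:
#   before=$(read_into_cache)
#   bin/ycsb run mongodb -s -P workload_kv
#   after=$(read_into_cache)
#   hit_rate "$before" "$after" 102400 100000
```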

A hot-spot access test (requestdistribution=zipfian) was also run; there the cache hit rate was 50%.

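Because the machine has a lot of physical memory, the Linux page cache cannot be ignored. Clearing it periodically makes the results closer to real-world behavior.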
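Clearing the Linux page cache is typically done by writing to /proc/sys/vm/drop_caches as root. A minimal sketch; the optional target argument and the 60-second interval in the usage comment are hypothetical, since the article does not say how often the cache was cleared.

```bash
#!/usr/bin/env bash
# drop_page_cache [TARGET]: flush dirty pages, then ask the kernel to drop
# the page cache plus dentries and inodes (value 3). Needs root for the real
# /proc file; the TARGET argument exists only so the function can be tried
# against an ordinary file.
drop_page_cache() {
  local target=${1:-/proc/sys/vm/drop_caches}
  sync
  echo 3 > "$target"
}

# Hypothetical usage (as root), once before each run or periodically:
#   while sleep 60; do drop_page_cache; done
```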

Configuration tuning for this scenario

- Journal

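The journal records write requests. In this scenario writes run at 10 MB/s, so placing the journal on a separate physical volume reduces I/O load on the data disk.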

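By default the journal lives under storage.dbPath, and MongoDB does not let you configure a different directory for it. The workaround is a symbolic link: move the journal directory elsewhere and leave a soft link to it under storage.dbPath.

```bash
# Run as root, with mongod stopped
mv /path/to/storage/dbpath/journal /path/another/
ln -s /path/another/journal /path/to/storage/dbpath/
chown -h mongod:mongod /path/to/storage/dbpath/journal
```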
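The same three commands can be wrapped in a function with a few safety checks. This is a sketch, not the author's script: the function name and checks are ours, it expects absolute paths (a relative link target would dangle), and it must run while mongod is stopped.

```bash
#!/usr/bin/env bash
# relocate_journal DBPATH TARGET_DIR [OWNER]
# Moves DBPATH/journal into TARGET_DIR, leaves a symlink in its place and,
# if OWNER is given (e.g. mongod:mongod), fixes ownership of both.
relocate_journal() {
  local dbpath=${1%/} target=${2%/} owner=${3:-}
  if [ -L "$dbpath/journal" ]; then
    echo "already a symlink: $(readlink "$dbpath/journal")" >&2
    return 1
  fi
  if [ ! -d "$dbpath/journal" ]; then
    echo "no journal directory under $dbpath" >&2
    return 1
  fi
  if [ -e "$target/journal" ]; then
    echo "$target/journal already exists" >&2
    return 1
  fi
  mkdir -p "$target"
  mv "$dbpath/journal" "$target/"
  ln -s "$target/journal" "$dbpath/journal"
  if [ -n "$owner" ]; then
    chown -h "$owner" "$dbpath/journal"   # the link itself
    chown -R "$owner" "$target/journal"   # the moved directory
  fi
}
```

Hypothetical usage: `relocate_journal /path/to/storage/dbpath /path/another mongod:mongod`. Refusing to run when the journal is already a link makes a second invocation harmless.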

- Transparent Huge Pages (THP)

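Testing showed that enabling or disabling THP makes almost no difference to performance; following the official recommendation, we disabled it.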
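To confirm the current THP setting, read the sysfs file: the active mode is the bracketed word. The helper name below is ours. Note that CentOS/RHEL 6 kernels, such as the 2.6.32 kernel used here, expose THP under redhat_transparent_hugepage rather than transparent_hugepage, so the sketch checks both.

```bash
#!/usr/bin/env bash
# thp_mode FILE: print the active mode, i.e. the bracketed word in
# e.g. "always madvise [never]".
thp_mode() {
  sed -n 's/.*\[\([^]]*\)].*/\1/p' "$1"
}

for dir in /sys/kernel/mm/transparent_hugepage /sys/kernel/mm/redhat_transparent_hugepage; do
  if [ -r "$dir/enabled" ]; then
    echo "$dir: enabled=$(thp_mode "$dir/enabled") defrag=$(thp_mode "$dir/defrag")"
  fi
done

# To disable on CentOS 6 until the next reboot (as root; does not persist):
#   echo never > /sys/kernel/mm/redhat_transparent_hugepage/enabled
#   echo never > /sys/kernel/mm/redhat_transparent_hugepage/defrag
```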

- readahead

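Testing showed that enabling disk readahead improves read and write performance somewhat. Note that the database must be restarted after changing the readahead value.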

```bash
# Run as root
# Show all devices
blockdev --report
# Show the readahead value
blockdev --getra /dev/sdx
# Set readahead, in 512-byte sectors; 256 = 128 KB
blockdev --setra 256 /dev/sdx
```
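Because `blockdev` counts readahead in 512-byte sectors, a tiny conversion helper avoids unit mistakes, and reading the value back after setting it confirms the change took effect. Both function names are ours; `set_readahead` needs root and a real device.

```bash
#!/usr/bin/env bash
# ra_to_kb SECTORS: convert a blockdev readahead value to KB.
ra_to_kb() {
  echo $(( $1 * 512 / 1024 ))
}

# set_readahead DEV SECTORS: set readahead, then read it back to verify.
set_readahead() {
  blockdev --setra "$2" "$1" || return 1
  [ "$(blockdev --getra "$1")" = "$2" ]
}

for ra in 0 32 64 128 256; do
  echo "readahead $ra sectors = $(ra_to_kb "$ra") KB"
done
```

After changing the value, restart mongod, for example with `service mongod restart` on CentOS 6 (assuming the stock init script name).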

On disk readahead, let's first look at the official documentation:

https://docs.mongodb.com/manual/administration/production-notes/

For the WiredTiger storage engine:

- Set the readahead setting to 0 regardless of storage media type (spinning, SSD, etc.). Setting a higher readahead benefits sequential I/O operations. However, since MongoDB disk access patterns are generally random, setting a higher readahead provides limited benefit or performance degradation. As such, for most workloads, a readahead of 0 provides optimal MongoDB performance. In general, set the readahead setting to 0 unless testing shows a measurable, repeatable, and reliable benefit in a higher readahead value. MongoDB Professional Support can provide advice and guidance on non-zero readahead configurations.

For the MMAPv1 storage engine:

- Ensure that readahead settings for the block devices that store the database files are appropriate. For random access use patterns, set low readahead values. A readahead of 32 (16 kB) often works well. For a standard block device, you can run `sudo blockdev --report` to get the readahead settings and `sudo blockdev --setra <value>` to change the readahead settings. Refer to your specific operating system manual for more information.

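The documentation recommends disabling readahead (setting it to 0) for the WiredTiger storage engine. In most scenarios MongoDB accesses the disk randomly, while a large readahead value mainly helps sequential I/O, so the benefit is limited and performance may even degrade. The exception is when testing shows a measurable, repeatable, and reliable improvement. The docs also slip in a plug for MongoDB's own paid support service. Smooth.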

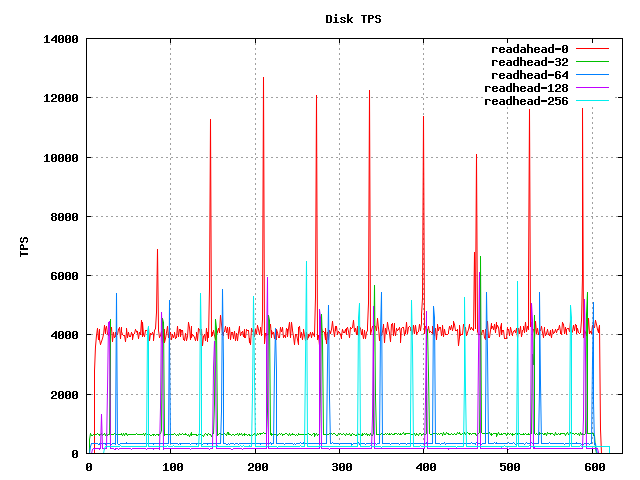

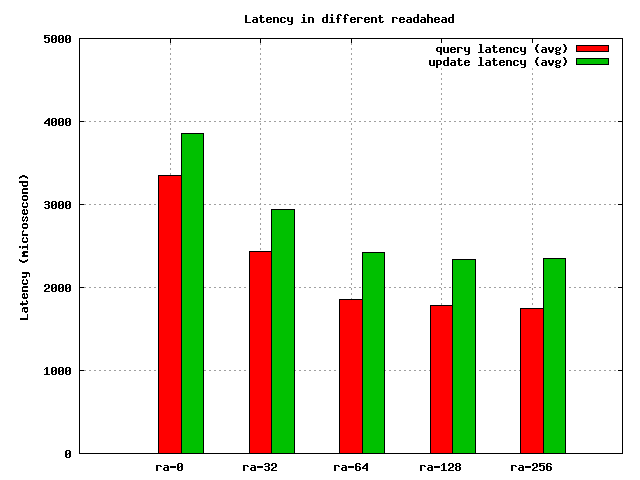

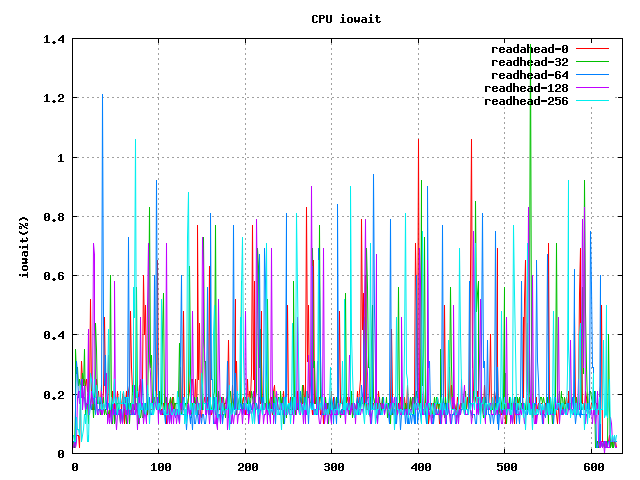

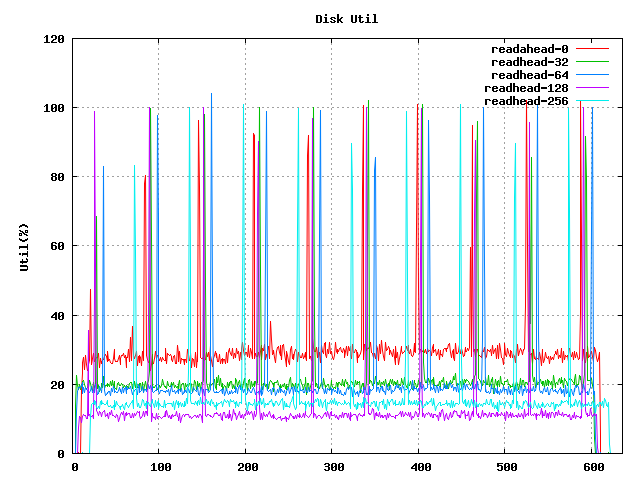

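We recorded read/write latency and system load with readahead set to 0, 32, 64, 128, and 256. With readahead disabled, read/write latency was higher. With readahead enabled, MongoDB read/write performance stabilized at 64 and was better still at 128, which showed up as relatively lower disk TPS, disk utilization, and CPU iowait.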
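A sweep like this can be scripted roughly as follows. It is a dry-run sketch, not the author's harness: the device, workload file name and service name are placeholders, and with DRY_RUN=1 (the default) the commands are only printed.

```bash
#!/usr/bin/env bash
# Hypothetical readahead sweep: for each value, set readahead, restart
# mongod, drop the page cache, then run the YCSB workload (output on stdout).
DEV=${DEV:-/dev/sdx}
WORKLOAD=${WORKLOAD:-workload_kv}
DRY_RUN=${DRY_RUN:-1}

# run CMD...: print the command in dry-run mode, execute it otherwise.
run() {
  if [ "$DRY_RUN" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

sweep_readahead() {
  local ra
  for ra in "$@"; do
    run blockdev --setra "$ra" "$DEV"
    run service mongod restart
    run sh -c 'sync; echo 3 > /proc/sys/vm/drop_caches'
    run bin/ycsb run mongodb -s -P "$WORKLOAD" -target 200
  done
}
```

Usage: `sweep_readahead 0 32 64 128 256` to preview, then `DRY_RUN=0 sweep_readahead 0 32 64 128 256 | tee sweep.log` as root to execute.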

- Latency

- CPU iowait

- Disk Util

- Disk TPS
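CPU iowait, disk utilization and TPS like those charted above can be collected with `iostat -x` from the sysstat package. The parsers below are a hypothetical helper set that read saved iostat output and report the last sample. They look columns up by header name (%iowait, r/s, w/s, %util), so they assume sysstat's extended report layout, and they approximate TPS as r/s + w/s.

```bash
#!/usr/bin/env bash
# iowait_from_iostat < FILE: %iowait from the last avg-cpu sample.
iowait_from_iostat() {
  awk '
    /avg-cpu:/ {
      # The header starts with "avg-cpu:", so value columns are shifted by one.
      for (i = 2; i <= NF; i++) if ($i == "%iowait") col = i - 1
      want = 1; next
    }
    want && NF > 0 { last = $col; want = 0 }
    END { print last }
  '
}

# disk_from_iostat DEV < FILE: "<tps> <%util>" for DEV from the last sample.
disk_from_iostat() {
  awk -v dev="$1" '
    /^Device/ {
      for (i = 1; i <= NF; i++) {
        if ($i == "r/s") r = i
        if ($i == "w/s") w = i
        if ($i == "%util") u = i
      }
      next
    }
    u && $1 == dev { line = sprintf("%.2f %s", $r + $w, $u) }
    END { print line }
  '
}
```

Hypothetical usage: `iostat -x 1 60 > io.txt`, then `iowait_from_iostat < io.txt` and `disk_from_iostat sda < io.txt`.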